Regular readers and Search Marketing customers will already know about Google Panda and Penguin. Panda is the name given to the Google algorithm update, first rolled out in February 2011, that was intended to tackle low-quality content; Penguin is the update intended to punish sites that rely on low-quality links. Around May 2013 these appear to have been integrated into a new algorithm update, which seems to combine signals from other filters and further punishes sites that rely on either or both of these practices.

So if you have suffered from Panda and/or Penguin, what should you do now? A lot of search marketers are scratching their heads at the moment or, worse still, are going out of business. There are also plenty of comments in the search forums about brands dominating the results. Our view is that this confuses cause and effect: smaller companies are being pushed out, which "reveals" the brands.

Here is what we are seeing, and what we think is happening:

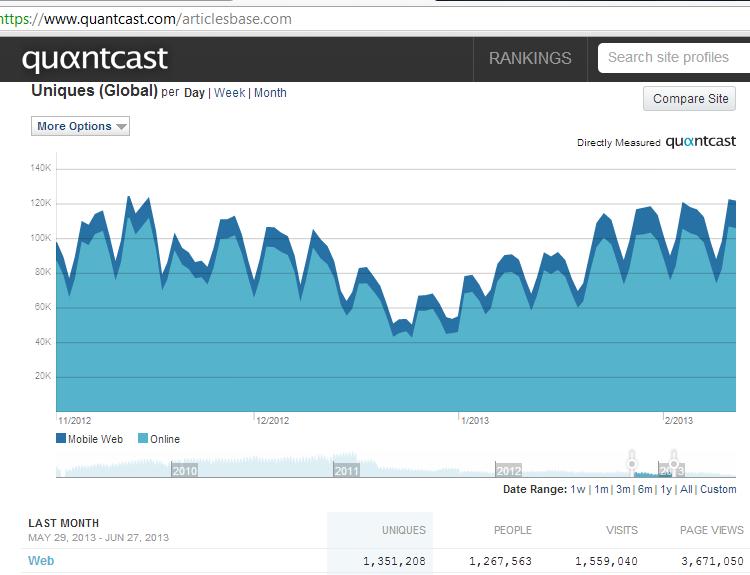

Content – if your site has too high a proportion of low-quality content, then you can expect to take a hit. See, for example, the following graphs:

Notice the heavy drop in February 2011: that was Panda 1, and as a result this site's monthly income fell from $500,000 to $100,000.

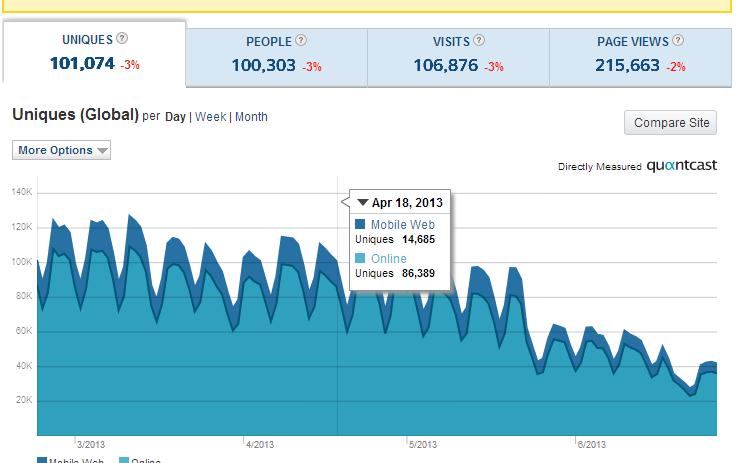

Note how they were hit again on May 22nd 2013. This strongly suggests that they have content issues. If you read sample articles from the site, the quality is actually very good; the issue is the uniqueness of the content. Poor-quality content, for example spun content or content that lacks coherence, is a problem, but unless your site is also significantly unique, you are in trouble.

They have aggressively tightened up on their content and removed articles that had no visitors or poor metrics. This helped around Christmas 2012, when they achieved a recovery by improving the ratio of rich to shallow content, but they have since been slammed again.

This suggests that the approach was right, but now that the threshold for the rich:shallow ratio has been tightened, they have been hit again.
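To make the rich:shallow idea concrete, here is a minimal Python sketch of the kind of content audit described above: classify each page as "rich" or "shallow", compute the ratio, and list candidates for pruning or rewriting. The `Page` fields, the `is_rich` rule and the 50-visit cut-off are hypothetical assumptions chosen for illustration only; they are not taken from Google, which does not publish its quality thresholds.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Page:
    url: str
    monthly_visits: int
    unique: bool  # hypothetical flag: True if no copies of this content were found elsewhere


# Hypothetical cut-off: pages with fewer monthly visits than this count as "shallow".
MIN_MONTHLY_VISITS = 50


def is_rich(page: Page) -> bool:
    """Hypothetical rule: a page is 'rich' only if it is unique AND attracts visitors."""
    return page.unique and page.monthly_visits >= MIN_MONTHLY_VISITS


def rich_to_shallow(pages: list[Page]) -> float:
    """Ratio of rich pages to shallow pages; infinite if no page is shallow."""
    rich = sum(1 for p in pages if is_rich(p))
    shallow = len(pages) - rich
    return rich / shallow if shallow else float("inf")


def prune_candidates(pages: list[Page]) -> list[str]:
    """URLs of shallow pages to remove or rewrite, in their original order."""
    return [p.url for p in pages if not is_rich(p)]


if __name__ == "__main__":
    site = [
        Page("/a", 120, True),   # unique and visited: rich
        Page("/b", 3, True),     # unique but no traffic: shallow
        Page("/c", 400, False),  # popular but copied elsewhere: shallow
    ]
    print(rich_to_shallow(site))   # 0.5
    print(prune_candidates(site))  # ['/b', '/c']
```

Removing or rewriting the pages returned by `prune_candidates` raises the ratio, which mirrors the site's approach of removing articles with no visitors or poor metrics.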

Penguin – historically, brands have had a big advantage: they benefit from the high profile and marketing strength of their corporate owners, so they tend not to build low-quality links. Thanks to their high profile and marketing spend, they attract lots of links from reputable places; think of sites like the BBC or the Guardian talking about sites like Amazon. A strong factor in the Google algorithm is the number of new and total referring URLs added per month, so, for example, a site that naturally attracts hundreds or thousands of new links per month is rewarded. Through general corporate advertising and marketing, branded sites pick up lots of links from newspaper and magazine websites. This is an indirect spin-off of their market strength, but it means they can pick up lots of links without having to pay for them directly.

Smaller companies have had to use lower-cost marketing approaches, and to be workable these rely at least in part on low-quality, high-volume link building. This replicates the approach of the brands, but with techniques such as having lower-cost non-native speakers write the content and using software to automate its distribution; think here of SE Nuke, article distribution robots, etc.

This worked historically because smaller sites could keep up with the brands' link building in numbers, though not in quality.

It's this content, written at a low English reading level and lacking uniqueness, that is now Google's target. They are using the Panda algorithm to quality-score backlinks, and this is the game-changing part.

A small site used to be able to add 1,000 links per month and score the same in the algorithm as a brand site picking up 1,000 links. Now the comparison is 1,000 quality links against 1,000 shallow links, and that approach no longer works.

Google recently issued new guidance; take a read of the following: http://googlewebmastercentral.blogspot.co.uk/2013/06/backlinks-and-reconsideration-requests.html

In particular, note https://support.google.com/webmasters/answer/6635 and these examples:

- Links that are inserted into articles with little coherence

- Forum comments with optimized links in the post or signature

- Low-quality directory or bookmark site links

Do you see what we mean? Those were the approaches webmasters used before the game changed.

So we can see a clear trend developing with each Google update:

- Each update brings a stricter requirement for unique content: every page within your site needs to be unique and of high quality.

- Backlinks from pages that are themselves of low quality are losing value or, worse, are becoming a liability. These need to be removed.

What to do then?

Well, the answer is straightforward.

Content – only add 100% unique, high-quality content to your websites. Remove anything that is duplicated or of low quality. Even if you wrote it yourself, it may have been copied by others, and you need to get this copied material removed from the Google index, either through DMCA notices or removal requests or, if need be, by rewriting your own pages.

Only build links from high-quality sites, and only use unique content for link building: no spun content, no social bookmarking packages, no more automation.

As your ratio of rich to shallow content improves, so will your position in the search results. It's going to be harder than before, and much more expensive, but with fewer competitors it's going to be worth it.

If you are suffering at the moment, reach out to Adrian in our Search Marketing team, the author of this blog post and our Panda/Penguin specialist.